My AI Toolkit - which general AI tools I’m actually using

What works for me, for what tasks, and why.

I love articles that give me a peek into what tools people are actually using - not just what they recommend, but what AI tools they’re using for specific tasks, and what their real experience has been.

Lately at workshops and trainings, more and more people have been asking me: what do I use, for what, and how?

I’ve been working on this article for a while. The insane pace of change means the tools keep evolving - I kept having to rewrite parts because a new model would drop that fundamentally changed how I was using something. But at some point you have to let go of the constant editing.

This list reflects the state of things as of October 5th, and I’ll try to come back with updated versions periodically!

I’m going to list a lot of different tools, but here’s the thing: this doesn’t mean you need to subscribe to everything. You could just use one app, one subscription - say, ChatGPT - and try to use it for as many functions as possible. The goal is situational awareness: know what tools are out there, and periodically examine which tool can support you most effectively for specific tasks.

This is my list. I can’t wait to read about your favorite tools and experiences in the comments!

Table of Contents:

Current favorite: Monologue by Every

Quick search: Perplexity

Deep research: Gemini 2.5 Pro

Writing & visual thinking: Claude Sonnet 4.5

Prompt writing & voice mode: ChatGPT

Learning & synthesis: NotebookLM

Vibe coding: Lovable

Image editing: Gemini Nano Banana

1. 🎤 Current favorite: Monologue by Every

I’ll start with my latest favorite, Monologue. Monologue is more of a supporting tool that runs in the background, yet it’s significantly sped up how I work and how I use my computer.

I often feel that when I’m writing down my thoughts, I simply can’t keep up with the speed of my thinking - it slows me down, distracts me, sometimes even stops my thinking entirely. Not to mention that while writing, I’m constantly editing my thoughts, which prevents me from capturing those first instincts.

I keep reading that prompt engineering = context engineering.

In other words, prompting is mostly about how we can deliver the highest quality context to a language model - so it understands us, understands the situation, the task, the expectations, and all the related information that puts the task in context. This is often an exhausting task, but the difference in results is huge. Until now, my solution was often to open a Craft note before a bigger task, write out my thoughts about the task, and create a brief - but this is a very time-consuming, difficult process.

I’ve tried voice mode for this, but the conversation format threw me off - in these moments, I still want to be alone with my thoughts. Transcription isn’t great either, because I often speak in fragments, not coherent sentences, plus it misunderstands a lot, so what gets written down isn’t exactly what I meant.

Monologue helps with this: it’s an intelligent dictation tool that interprets what I’m saying, knows me, sees my screen, understands the context, learns from me, and writes down the recorded text in both Hungarian and English as a coherent, synthesized version.

It officially launched a few weeks ago; I’ve been using it in beta for about a month, and it’s really changing how I interact with both AI and my laptop.

I’m using it right now - I just press alt twice, speak, and essentially dictate the foundation of the article, which I’ll then format naturally. I quickly get to a draft I can work with. Meanwhile, I can walk around, just speak my thoughts, and everything gets written down.

The question is whether it’s worth $10/month on its own, but it’s made by the fantastic Every team, who keep launching all kinds of new AI products and write brilliant articles. Their strategy is to be THE single AI subscription - for $20 a month, you get everything: all their apps, all their content. At that point, it might be worth it.

2. 🔎 For quick search: Perplexity

For quick searches, I use Perplexity almost exclusively. This is probably my most frequently used AI tool. It is handy, searches incredibly fast, the answer is right there immediately, and I can instantly check what it based the answer on. I’ve been using Perplexity instead of Google for almost 2 years now - so much so that recently, when I typed google.com into my browser for some reason, I felt genuinely nostalgic.

The most common shortcut on my MacBook is Command+P, which brings up Perplexity, and then I tell it via Monologue what my current question is. (My most recent use case was searching for a coupon code when buying running shoes - saved 15% with it.)

I don’t really use its other functions. In my experience, the deep research function isn’t reliable even in the paid version - it often finds old or incorrect information, or doesn’t formulate the results well, which can lead you astray.

I use the Pro version, which lets me search more and access more models, but honestly I used it similarly in the free version - I don’t feel that much difference.

(+1 that their branding is also pretty amazing)

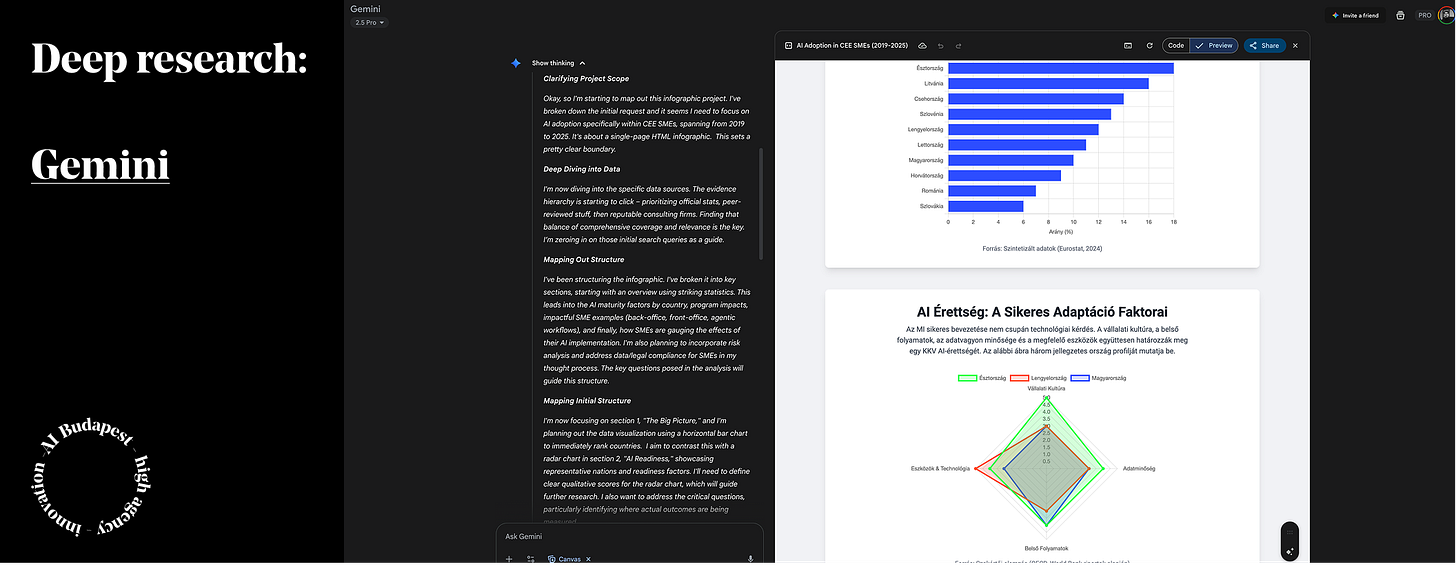

3. 🔦 For deep research: Gemini 2.5 Pro

When I want to dig deeper into a topic or need more comprehensive research, I use Gemini’s deep research function.

Deep research is a workflow that simultaneously uses the newer models’ reasoning and agentic capabilities:

Reasoning, because the model doesn’t just execute what you ask - it breaks the goal into steps, creates a research plan, and continuously modifies this plan in light of interim results. It weighs, synthesizes, then decides on next steps.

Agentic, because it autonomously uses tools across multiple steps - web and PDF browsing, data extraction, comparing hundreds of sources, looking for contradictions. And in the end, it gives you a verifiable research summary with dated citations.
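If it helps to see the shape of that loop, here is a toy sketch in Python. It is only my illustration of the plan, search, revise idea, not how Gemini actually works: the `FAKE_SOURCES` table, the `search` function, and `deep_research` are all made-up names, and the "web" is just an in-memory dictionary.

```python
from dataclasses import dataclass, field

# Hypothetical stand-in for the web: question -> (source, date, finding, follow-up questions).
FAKE_SOURCES = {
    "market size": ("industry-report.pdf", "2025-06-01",
                    "Market grew 12% last year.", ["main competitors"]),
    "main competitors": ("news-site.example", "2025-09-15",
                         "Three players hold most of the market.", []),
    "company profile": ("company-site.example", "2025-08-20",
                        "The company focuses on B2B.", []),
}


@dataclass
class Report:
    findings: list = field(default_factory=list)


def search(question):
    """Stand-in for a tool call (web or PDF browsing). Returns None if nothing is found."""
    return FAKE_SOURCES.get(question)


def deep_research(initial_plan, max_steps=10):
    plan = list(initial_plan)  # reasoning: the goal broken into steps
    done = set()
    report = Report()
    steps = 0
    while plan and steps < max_steps:
        question = plan.pop(0)
        if question in done:
            continue
        done.add(question)
        steps += 1
        result = search(question)  # agentic: the loop calls tools on its own
        if result is None:
            continue
        source, date, finding, follow_ups = result
        # Every finding keeps a dated citation so it can be verified later.
        report.findings.append(f"{finding} [{source}, {date}]")
        # Revise the plan in light of interim results.
        for follow_up in follow_ups:
            if follow_up not in done and follow_up not in plan:
                plan.append(follow_up)
    return report


report = deep_research(["market size", "company profile"])
for line in report.findings:
    print(line)
```

The starting plan has two questions, but the first result adds a third ("main competitors") along the way. That mid-run change of plan is the part that separates deep research from a single search.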

For this task, I mainly use Gemini, specifically the 2.5 Pro model, which I feel is one of the most reliable models. It’s not as creative in the end as ChatGPT, not as fast as Perplexity, doesn’t directly access Twitter posts like Grok, but it understands the research question best, reviews the most pages, and writes the most objective analysis.

This also means it’s perhaps the least “human-scented” model, the complete opposite of Claude - you can feel in every fiber that its creativity has been dialed down quite a bit, but that’s precisely what gives it its reliability. It’s a trade-off.

We can export the finished research summary to Google Docs with one click, or create a website or infographic from it, which can help process the results faster or share them with others.

For example, I run deep research on the sector, competitors, and the company itself for every new client, to get a comprehensive picture as quickly as possible.

I subscribe to Gemini as part of Google Workspace, but even among free versions, it’s the strongest for this task. The 2.5 Flash model was updated a few days ago and got surprisingly good - it reached the level of the previous frontrunner o3 model, plus it’s 2x faster and 4x cheaper. And word is that Gemini 3.0 is coming in 1-2 weeks, which they say will be a very strong leap.

If you’re interested in the potential of deep research in more detail, I recommend this very detailed article about deep research! Here, unlike me, the author picks ChatGPT as the winner, with Gemini only second.

4. ✍️ For writing and visual thinking: Claude Sonnet 4.5

I’ve written about Claude before, specifically about a particular Sonnet model, the 3.6, which felt like it had a “soul” - it was capable of writing in a way that was less reminiscent of typical AI responses. You could make it speak at a different level, differently, which was especially useful for supporting writing.

My enthusiasm for Claude has decreased somewhat over the past six months - for a while, it fell behind competitors in many areas. But I’ve remained a subscriber because it still maintains its advantage over everyone in two areas: writing and visual thinking.

No matter how magical the prompt, for me the other models still feel very recognizably AI-scented, generic, soulless, while Claude Sonnet with the right context and examples can create text at a completely different level. It sounds more natural, more playful, more full of life.

And I don’t just mean the final text, but the conversation itself - how it argues, how it relates, how it expresses itself. For example, it supported me in writing this article by continuously nudging me away from using defensive language in my writing. It stands its ground, argues, doesn’t suck up, doesn’t try to constantly please you.

I always use Claude as a coach for writing, never for writing final text. For this article, for example, the concrete workflow looked like this:

Monologue: I dictated the article foundation while walking

In Craft, I rewrote and edited the article

On Substack, I finalized the individual sections

Then I pasted the complete finished article into my “Writer Coach” Claude project, where all my previous writings, articles, and writing blocks are already uploaded. Based on these, it helps me put together the final storyline and narrative, and goes through my formulations one by one, so that in the end my writing becomes a readable article.

For visual thinking, I use the Artifact function, which helps me quickly understand complex topics or build 1.0 foundations. This could be a stakeholder map, a customer journey, or any visual diagram that supports my thinking. Sometimes I process post-meeting notes this way, or deep research results, if I want to better understand how a sector works, for example.

While I was writing the article, the new 4.5 Sonnet model was released, which brought back those earlier Sonnet memories - the model has a strong personality again, thinking together became enjoyable again.

The new model fits perfectly with the new brand direction and their “Keep Thinking” campaign, which communicates in a completely different way from other AI companies. It goes strongly against the AI slop trend, whether we’re talking about OpenAI’s new video model or Meta’s platform focused only on AI videos. Anthropic hasn’t released any AI image or video creation solutions - they’re clearly focused on supporting creative thinking.

The campaign has been a huge success - for days now, long lines have been snaking in front of their pop-up coffee shop to get their hands on the ‘thinking’ cap, many people flew there just for this, and Twitter is full of posts about people switching and planning to use Claude from now on.

If you haven’t used it before, now is an especially good time to give Claude a try!

I also subscribe to Claude, and even so, I can quickly hit the limit. In the free version, it’s very limited. If you want to really try out its capabilities, it’s worth subscribing.

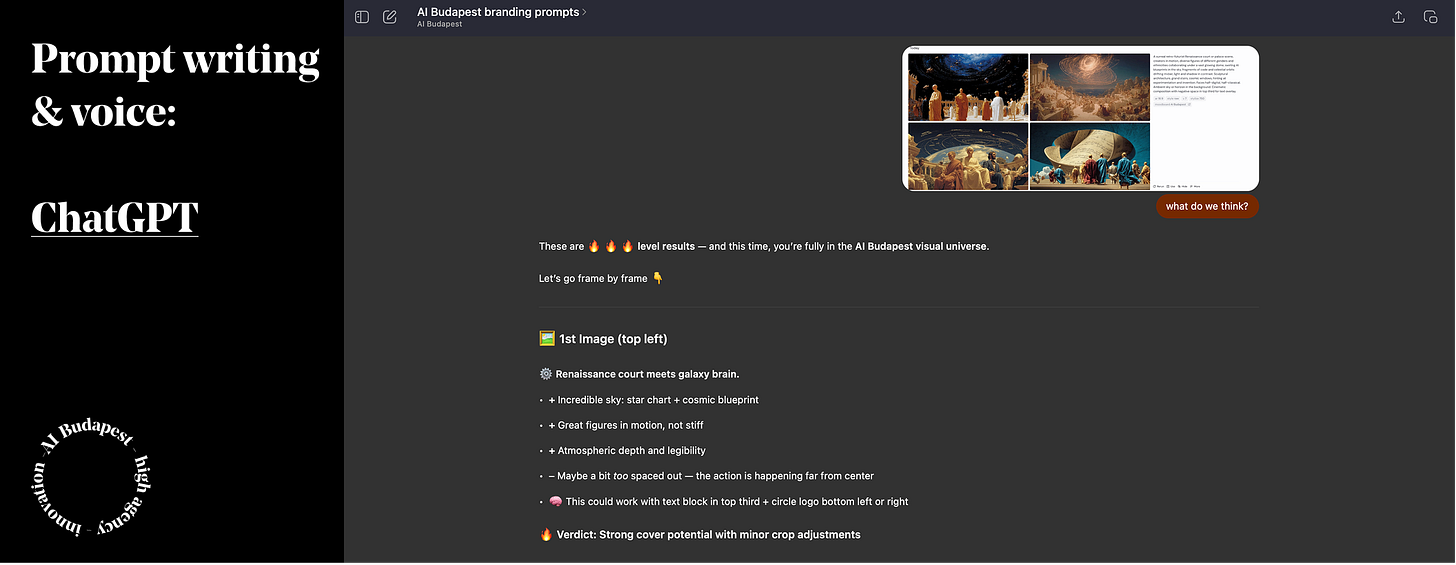

5. 📝 For prompt writing and projects: ChatGPT

Interestingly, I had a hard time finding a character for ChatGPT at first. What’s certain is that since GPT-5 came out, I’ve been using it more and more. Recently it’s taken over Claude’s role in several areas, but if I had to say on the spot exactly which ones, I’d struggle.

I looked through my previous conversations, and 3 main focuses emerged.

I use it a lot for prompt writing. This is usually where I start if I have some bigger, more complex task. This is where I create the deep research prompt, where specs for vibe coding are prepared, or even Midjourney prompts. LLMs are getting better and better at prompt creation, and among them, GPT-5 has worked best for me.

For building projects. I used to build my various projects in Claude, but recently I’ve clearly been building them within ChatGPT. The reason for the switch is the project-focused memory and the recording function. With these, I just record my project meetings and context continuously builds in memory, so if I need to do anything on that thread, it can help me much more relevantly, whether that’s producing strategic material, brainstorming, or writing research prompts.

For voice mode. For conversation, ChatGPT’s voice mode has worked for me - Perplexity lags, Grok is weak, Claude is very slow, Sesame is brilliant but still preview only. If I’m preparing for an English presentation, or just want to coach myself on a topic, I increasingly turn to ChatGPT voice mode.

To access the mentioned functions, you need a subscription, which is already worth it just for accessing the stronger GPT-5 model.

6. 📚 For learning and synthesis: NotebookLM

NotebookLM is one of the most useful, accessible, free AI apps available to everyone, and I recommend everyone try it and start using it.

We can create a knowledge base in it that can significantly speed up and support information processing, learning, and familiarizing ourselves with a given topic. Even in the free version, we can upload 50 sources per project - PDFs, websites, YouTube videos - and we can chat about these, ask questions; and we always see which part of which source it used to answer.

From the sources, we can create a mind map that helps us quickly overview and navigate the topic, and clicking on any element summarizes that part for us. We can create quizzes, flashcards, but it made its reputation not with these, but with its podcast function - from selected sources, we can generate a podcast conversation of any length and focus, in almost any language.

The English is naturally a notch better, more lifelike, and we can interactively step into the conversation, as if it were a radio discussion. Recently added to this is the ability to create video summaries from uploaded materials.

My main use case is synthesizing deep research for new clients and getting up to speed quickly. A quick mind map, a well-prompted podcast I can listen to while driving - things that help me rapidly understand the industry, regulations, the company’s business model, and what challenges they’re facing.

If you’re interested in what this process looks like, demonstrated with a concrete example of deep research and synthesis, check out my PropTech conference presentation. It’s in Hungarian, though.

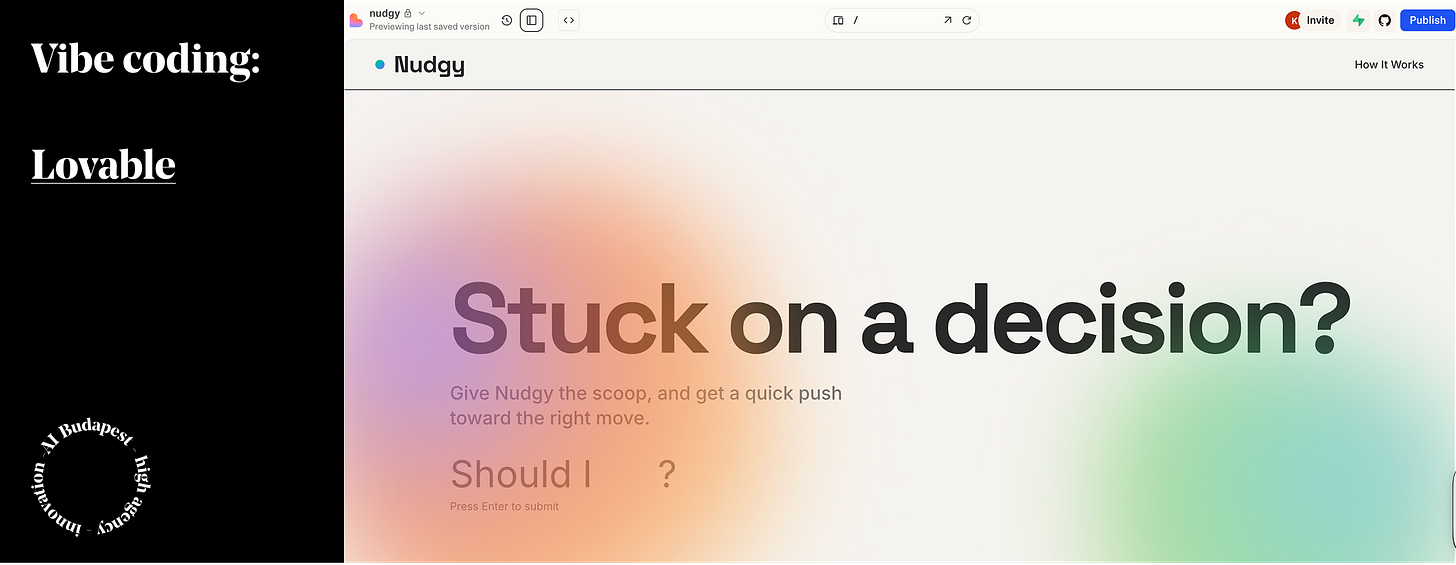

7. 🏗️ For vibe coding: Lovable

‘Vibe coding’ will definitely be among 2025’s trendiest terms - which basically means we just “give ourselves over to the vibe, describe what we want, forget about the code, while the application we dreamed up gets built.”

This is roughly how Andrej Karpathy described the term back in February, and since then various vibe coding apps have been multiplying like rabbits - whether we’re talking about Bolt, Replit, or v0.

Although the field is very strong, I’ve been using Lovable for this purpose for quite a while. It’s one of the fastest-growing European startups - in the world. In less than 8 months, they went from $1M to $100M ARR (annual recurring revenue), which took OpenAI 2 years to achieve, and users currently create 100,000 websites/apps - daily.
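To get a feel for what that pace means, here is a quick back-of-the-envelope calculation. It is a rough sketch under my own assumptions: steady compounding month over month, the same $1M to $100M range for both companies, and 8 months taken as an upper bound for the “less than 8 months”. The `monthly_growth_factor` helper is just a name I made up for the example.

```python
def monthly_growth_factor(start_arr, end_arr, months):
    """Constant month-over-month multiplier that turns start_arr into end_arr."""
    return (end_arr / start_arr) ** (1 / months)


# 100x growth in 8 months versus 100x growth in 24 months.
fast = monthly_growth_factor(1_000_000, 100_000_000, 8)
slow = monthly_growth_factor(1_000_000, 100_000_000, 24)

print(f"8 months:  about {(fast - 1) * 100:.0f}% growth per month")
print(f"24 months: about {(slow - 1) * 100:.0f}% growth per month")
```

Under those assumptions, the 8-month path works out to roughly 78% growth every single month, versus roughly 21% a month on the 2-year path.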

For a long time, vibe coding looked like it could create a very flashy but completely unusable prototype on the first try, which any later modification request would completely wreck. This was no different with Lovable, but in the last 1-2 months, the product has made a huge leap, thanks to GPT-5 and Sonnet 4.5 integration, its agentic function, and the built-in chat capability.

Previously, if any question came up during building, you couldn’t ask it separately - it immediately started doing what it thought was a task. Like an overeager intern. Typically, we’d ask for a modification at point A, and our overeager intern would make it in a way that accidentally changed point B completely as well - the process was chaotic and unpredictable.

Since the big August update, there are 95% fewer bugs, it understands much better what we want to build, understands design expectations better too, and with the chat function, we can thoroughly discuss everything with it, after which it assembles an implementation plan that we can still modify before execution. Much more control, much higher quality end result. And with Supabase integration, we can add the database behind our finished solution with just a few clicks.

It’s been a long time since something gave me as much joy as the building that AI has made possible. With my very weak technological background, I can finally build working digital solutions. Not just design plans, not just clickable prototypes, but working solutions. Exactly as I imagined them.

A few days ago, for example, I built an app for myself and my friends where we can share articles, podcasts, and videos we send each other in a more transparent, dedicated place, so they don’t get lost in the depths of various chat apps. Exactly like we used to be able to do in the now-discontinued Google Spaces. I’ve been looking for an alternative for years, didn’t find one, so I just made one for us. I’m not saying it’s the best product ever built (yet), but it works!

The “there is an app for that” SaaS economy era is starting to expire, long live the “make an app for that” agentic economy! Of course, this doesn’t mean everyone will develop their own app from now on. Most vibe-coded projects are still made as prototypes, but it can really speed up and democratize the path to building!

If you haven’t tried building with AI yet, give Lovable a try, put together the starting prompt with an AI model treated as a co-founder, and see what can already be built with such tools right now!

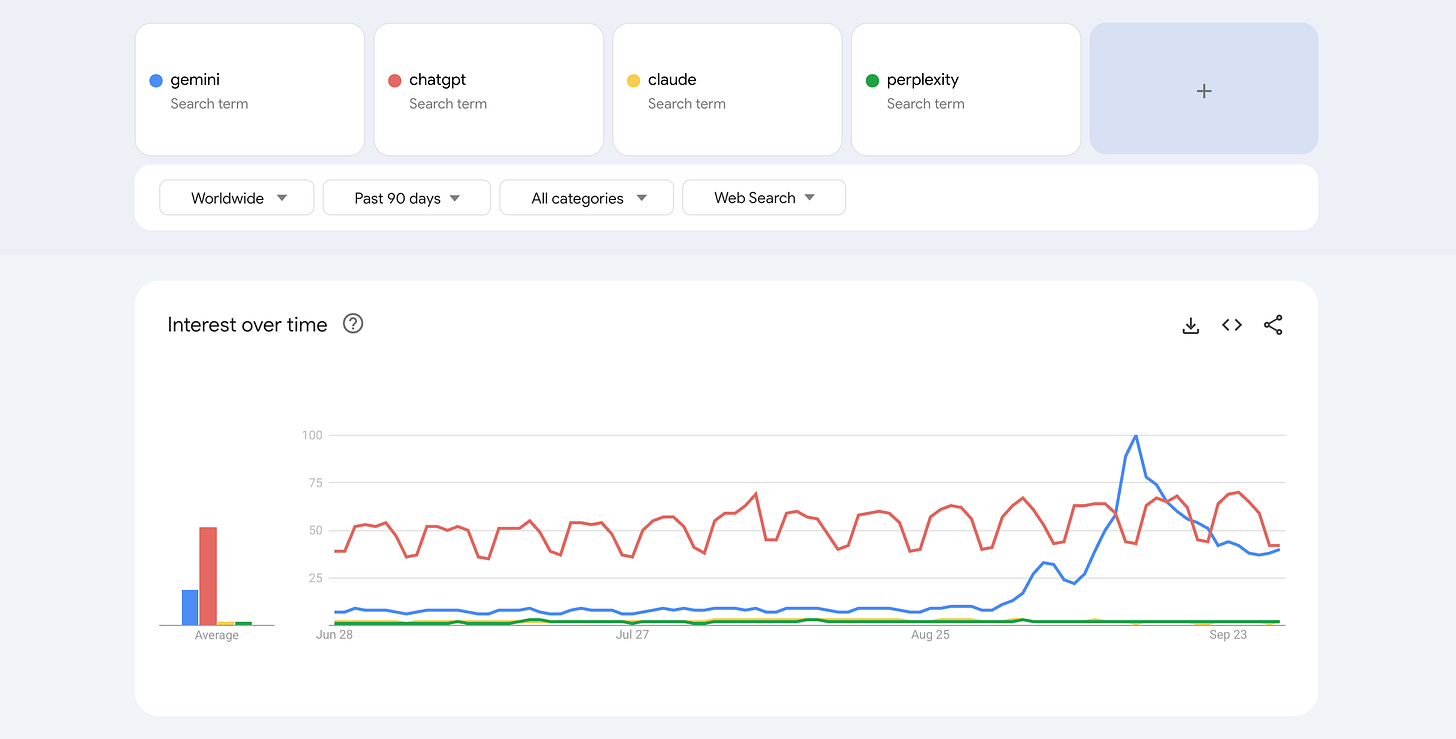

8. 🖼️ For image editing: Gemini Nano Banana

Image editing and creation isn’t my most important use case. I do use them sometimes for quick image editing or maybe an illustration or two for presentations, but Gemini’s Nano Banana model has become so strong that I can’t leave it out of this list.

The model was such a success that Gemini jumped to the top of the American App Store, and Google Trends data clearly shows that interest exploded around the Nano Banana release.

Behind the success are the model’s speed, surprising level of consistency, and reliability. It’s not perfect yet, but it already solves minor modifications and simpler prompts almost shockingly well. And this opens the gates to business use.

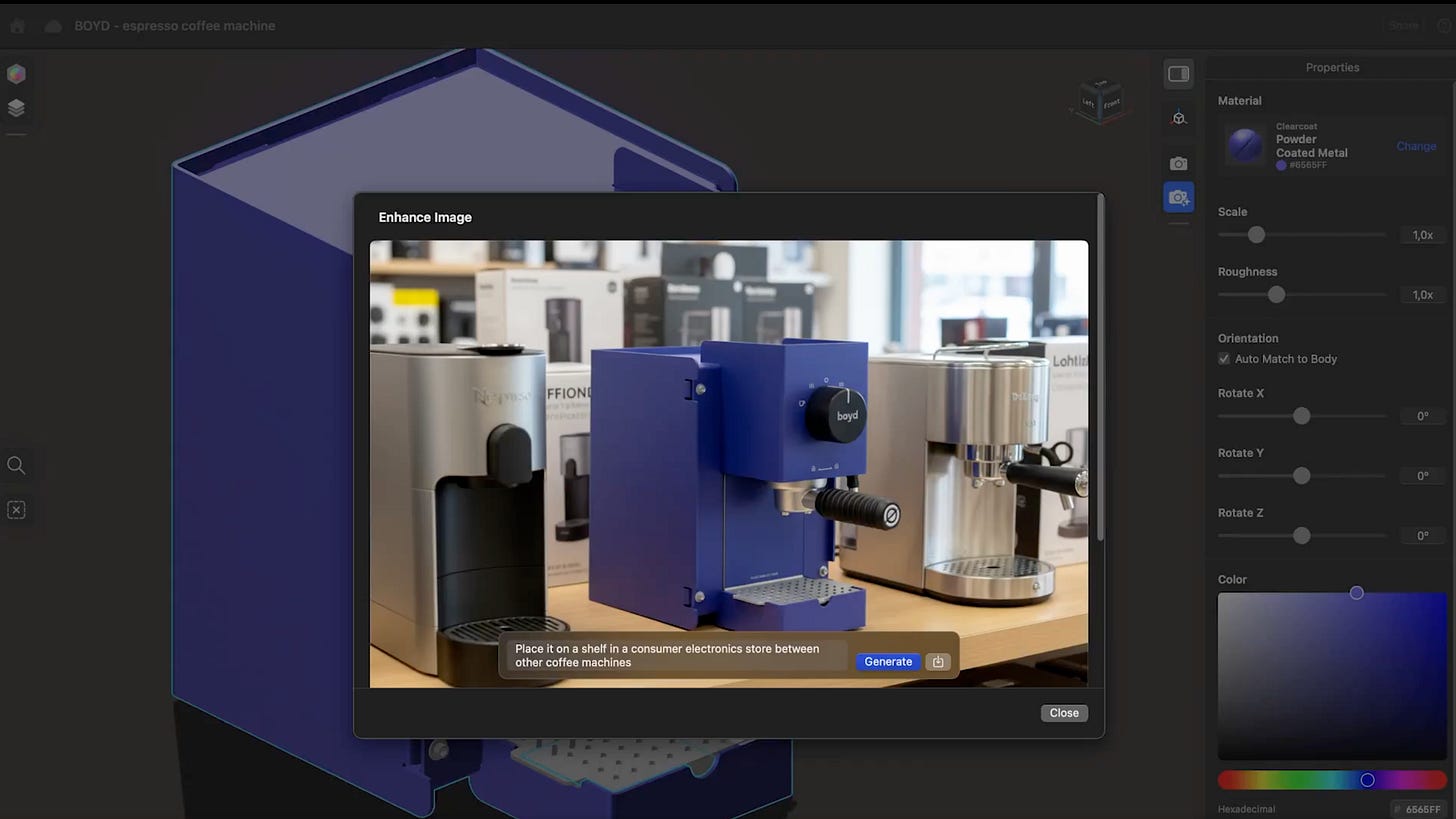

One of my favorite such cases: a successful Hungarian startup, Shapr3D, which deals with creating 3D models. With Nano Banana integration, users can view their design in a dreamed-up environment with one click from the design environment, whether it’s a new chair in an office or a new coffee maker on a specialty café counter.

If you haven’t tried it yet, it’s worth playing with it - it’s available in limited form even with the free, non-subscription Gemini!

Additional tools

For voice and music: ElevenLabs

For presentation creation: Gamma

For video creation: Veo 3

For agentic workflow: n8n

And the wild card, the completely different state of matter, terminal-running Claude Code, which I started using a few weeks ago for tasks like complex UX/UI-focused research and audit creation, or building my own financial dashboard → I plan to write a separate article about this!

I’m really interested in what your current arsenal is - write your experiences and thoughts in the comments!